Introducing xsAI, a < 6KB Vercel AI SDK alternative

by 藍+85CD, Neko Ayaka


Why another AI SDK?

The Vercel AI SDK is way too big: it includes unnecessary dependencies.

For example, the Vercel AI SDK ships with non-optional OpenTelemetry dependencies, binds the user to zod (you don't get to choose), and so much more...

This makes it hard to build small, decent AI applications and CLI tools with a smaller bundle size and the more controllable, atomic capabilities that users truly need.

But it doesn't need to be like this, does it?

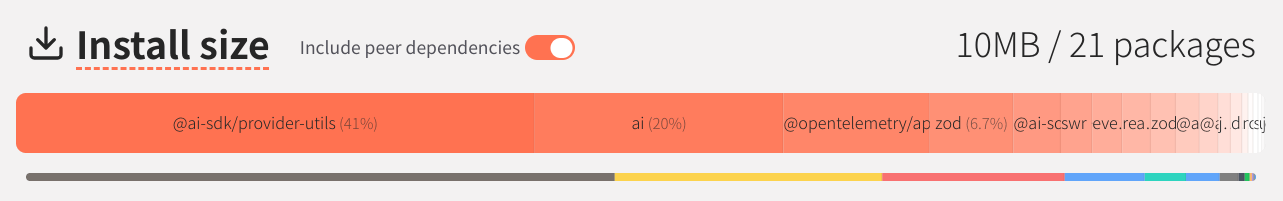

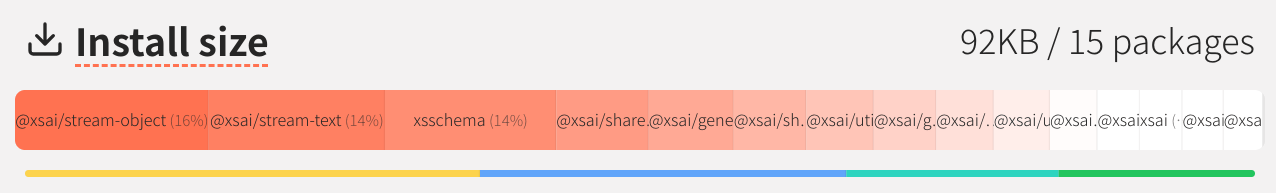

So how small is xsAI?

Without further ado, let's take a look:

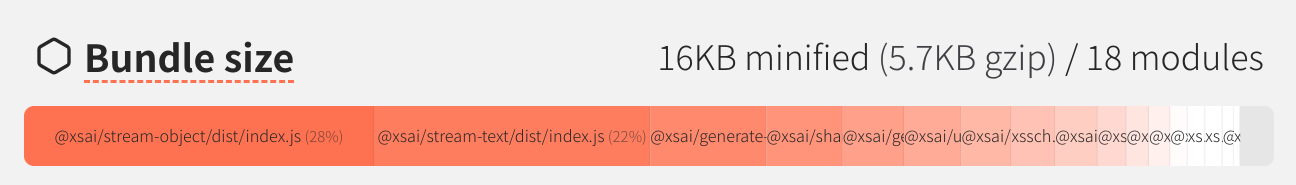

It's roughly a hundred times smaller than the Vercel AI SDK (by install size) and has most of its features.

It is also 5.7KB gzipped, so the title is not wrong.

Getting started

You can install the xsai package, which contains all the core utils.

```shell
npm i xsai
```

Or install the corresponding packages separately according to the required features:

```shell
npm i @xsai/generate-text @xsai/embed @xsai/model
```

Generating Text

So let's start with some simple examples.

```ts
import { generateText } from '@xsai/generate-text'
import { env } from 'node:process'

const { text } = await generateText({
  // The three shared fields: provider API key, base URL, and model name.
  apiKey: env.OPENAI_API_KEY!,
  baseURL: 'https://api.openai.com/v1/',
  model: 'gpt-4o',
  messages: [{
    role: 'user',
    content: 'Why is the sky blue?',
  }],
})

console.log(text)
```

By default, xsAI does not use provider functions the way the Vercel AI SDK does. Instead, we simplified them into three shared fields: `apiKey`, `baseURL`, and `model`.

- `apiKey`: Provider API key
- `baseURL`: Provider base URL (merged with the path of the corresponding util, e.g. `new URL('chat/completions', 'https://api.openai.com/v1/')`)
- `model`: Name of the model to use
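To make the `baseURL` merging concrete, here is a minimal sketch of how the three fields could turn into an OpenAI-compatible request. `buildChatCompletionRequest` and its types are hypothetical names for illustration only; the `Authorization: Bearer` header and the JSON body follow the OpenAI Chat Completions API, and the exact request xsAI builds may differ.

```typescript
// Hypothetical types for this sketch; not xsAI's own type names.
interface SharedFields {
  apiKey: string
  baseURL: string
  model: string
}

interface ChatMessage {
  role: 'system' | 'user' | 'assistant'
  content: string
}

interface PreparedRequest {
  url: string
  headers: Record<string, string>
  body: string
}

// Turns the three shared fields plus messages into a POST to
// `<baseURL>chat/completions`, following the OpenAI Chat Completions API.
function buildChatCompletionRequest(fields: SharedFields, messages: ChatMessage[]): PreparedRequest {
  return {
    // Relative resolution: the `/v1/` path is kept only if baseURL ends with "/".
    url: new URL('chat/completions', fields.baseURL).href,
    headers: {
      'Authorization': `Bearer ${fields.apiKey}`,
      'Content-Type': 'application/json',
    },
    body: JSON.stringify({ model: fields.model, messages }),
  }
}

const request = buildChatCompletionRequest(
  { apiKey: 'sk-example', baseURL: 'https://api.openai.com/v1/', model: 'gpt-4o' },
  [{ role: 'user', content: 'Why is the sky blue?' }],
)
console.log(request.url) // https://api.openai.com/v1/chat/completions

// Without the trailing slash, the last path segment ("v1") gets replaced.
console.log(new URL('chat/completions', 'https://api.openai.com/v1').href) // https://api.openai.com/chat/completions
```

The second `console.log` is why the trailing slash in `baseURL` matters: `new URL` resolves relative paths the way a browser resolves a link, so a base without the slash loses its last segment.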

Don't worry if you need to support a non-OpenAI-compatible API provider such as Claude: we left room to override `fetch(...)`, where you can customize how the request is made and how the response is handled.

This design lets xsAI support any OpenAI-compatible API without having to create provider packages.
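As a rough sketch of what overriding `fetch(...)` can look like, here is a wrapper that moves the bearer token into an `x-api-key` header, which is where Anthropic's native API expects the key. `withApiKeyHeader`, `FetchLike`, and the stub are hypothetical; how the wrapper is handed to xsAI is covered in the xsAI documentation. A real Claude adapter would also have to change the endpoint path, add provider-specific headers, and translate request and response bodies, none of which this sketch does.

```typescript
// Minimal shapes so the sketch does not depend on DOM or Node fetch typings.
interface RequestInitLike {
  method?: string
  headers?: Record<string, string>
  body?: string
}

type FetchLike = (url: string, init?: RequestInitLike) => Promise<unknown>

// Hypothetical wrapper: moves a bearer token into an `x-api-key` header,
// then delegates to whatever fetch implementation it wraps.
function withApiKeyHeader(baseFetch: FetchLike): FetchLike {
  return (url, init = {}) => {
    const headers: Record<string, string> = { ...init.headers }
    const auth: string | undefined = headers['Authorization']
    if (auth !== undefined && auth.startsWith('Bearer ')) {
      delete headers['Authorization']
      headers['x-api-key'] = auth.slice('Bearer '.length)
    }
    return baseFetch(url, { ...init, headers })
  }
}

// Usage with a stub in place of the real `fetch`, to show what gets sent.
const stubFetch: FetchLike = async (url, init) => {
  console.log(url, init?.headers)
  return null
}

void withApiKeyHeader(stubFetch)('https://api.example.com/v1/chat/completions', {
  method: 'POST',
  headers: { 'Authorization': 'Bearer sk-example', 'Content-Type': 'application/json' },
})
```

Wrapping rather than replacing `fetch` keeps the transport itself untouched, so the same wrapper works over the global `fetch` or any other implementation with a compatible signature.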

Generating Text w/ Tool Calling

Continuing with the example above, we now add a tool.

```ts
import { generateText } from '@xsai/generate-text'
import { tool } from '@xsai/tool'
import { env } from 'node:process'
import * as z from 'zod'

// A tool has a name, a description, a parameter schema, and an `execute` function.
const weather = await tool({
  name: 'weather',
  description: 'Get the weather in a location',
  parameters: z.object({
    location: z.string().describe('The location to get the weather for'),
  }),
  // Called with the parsed arguments when the model requests this tool.
  execute: async ({ location }) => ({
    location,
    temperature: 72 + Math.floor(Math.random() * 21) - 10,
  }),
})

const { text } = await generateText({
  apiKey: env.OPENAI_API_KEY!,
  baseURL: 'https://api.openai.com/v1/',
  model: 'gpt-4o',
  messages: [{
    role: 'user',
    content: 'What is the weather in San Francisco?',
  }],
  tools: [weather],
})
```
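Tool calling is a round trip: the model replies with a tool call whose arguments arrive as a JSON string, the matching tool's `execute` runs, and its result goes back to the model as a `tool` message. The sketch below shows that single step using the OpenAI Chat Completions message shapes. `runToolCall` and the simplified `ToolDef` type are hypothetical, not xsAI internals, and the sketch skips the schema validation of arguments that a real implementation would perform.

```typescript
// Message shapes from the OpenAI Chat Completions tool-calling format.
interface ToolCall {
  id: string
  type: 'function'
  // The model sends arguments as a JSON-encoded string, not an object.
  function: { name: string, arguments: string }
}

interface ToolMessage {
  role: 'tool'
  tool_call_id: string
  content: string
}

// Hypothetical, simplified tool shape for this sketch only.
interface ToolDef {
  name: string
  execute: (args: Record<string, unknown>) => unknown
}

async function runToolCall(tools: ToolDef[], call: ToolCall): Promise<ToolMessage> {
  const tool = tools.find(t => t.name === call.function.name)
  if (!tool)
    throw new Error(`Unknown tool: ${call.function.name}`)

  // A real implementation would validate `args` against the tool's schema here.
  const args = JSON.parse(call.function.arguments) as Record<string, unknown>
  const result = await tool.execute(args)

  // The result is serialized back into the conversation as a `tool` message.
  return { role: 'tool', tool_call_id: call.id, content: JSON.stringify(result) }
}

// Usage with a deterministic stand-in for the weather tool above.
const weather: ToolDef = {
  name: 'weather',
  execute: async ({ location }) => ({ location, temperature: 72 }),
}

runToolCall([weather], {
  id: 'call_1',
  type: 'function',
  function: { name: 'weather', arguments: '{"location":"San Francisco"}' },
}).then(message => console.log(message.content)) // {"location":"San Francisco","temperature":72}
```

In the OpenAI protocol, this `tool` message is appended to `messages` and the conversation is sent again, so the model can answer the user in plain text.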

Wait, zod isn't good for tree shaking, and it's annoying. Can we use valibot? Of course!

```ts
import { tool } from '@xsai/tool'
import { description, object, pipe, string } from 'valibot'

const weather = await tool({
  name: 'weather',
  description: 'Get the weather in a location',
  parameters: object({
    location: pipe(
      string(),
      description('The location to get the weather for'),
    ),
  }),
  execute: async ({ location }) => ({
    location,
    temperature: 72 + Math.floor(Math.random() * 21) - 10,
  }),
})
```

We can even use arktype, and the list of compatible libraries will grow in the future:

```ts
import { tool } from '@xsai/tool'
import { type } from 'arktype'

const weather = await tool({
  name: 'weather',
  description: 'Get the weather in a location',
  parameters: type({
    location: 'string',
  }),
  execute: async ({ location }) => ({
    location,
    temperature: 72 + Math.floor(Math.random() * 21) - 10,
  }),
})
```

xsAI doesn't limit your choice to zod, valibot, or arktype: with the power of Standard Schema, you can use any schema library you like, as long as it supports Standard Schema.
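Standard Schema works because each supporting library exposes the same `~standard` property with a `validate` function, so code that consumes schemas never needs to know which library produced them. Below is a simplified, hand-written copy of the validation part of that interface (the full type ships in the `@standard-schema/spec` package) plus a library-agnostic helper. `parseWith` and `stringSchema` are hypothetical names for illustration. Tool parameters also need a JSON Schema to send to the model, which is a separate concern not shown here.

```typescript
// Simplified copy of the Standard Schema v1 interface (validation part only).
interface StandardIssue {
  readonly message: string
  readonly path?: ReadonlyArray<PropertyKey>
}

type StandardResult<Output> =
  | { readonly value: Output, readonly issues?: undefined }
  | { readonly issues: ReadonlyArray<StandardIssue> }

interface StandardSchema<Output = unknown> {
  readonly '~standard': {
    readonly version: 1
    readonly vendor: string
    readonly validate: (value: unknown) => StandardResult<Output> | Promise<StandardResult<Output>>
  }
}

// Library-agnostic helper: it only touches `~standard`, so it treats zod,
// valibot, arktype, or a hand-written schema exactly the same way.
async function parseWith<Output>(schema: StandardSchema<Output>, value: unknown): Promise<Output> {
  // `validate` may be sync or async; awaiting handles both.
  const result = await schema['~standard'].validate(value)
  if (result.issues)
    throw new Error(result.issues.map(issue => issue.message).join('; '))
  return result.value
}

// A hand-rolled schema standing in for what a schema library would provide.
const stringSchema: StandardSchema<string> = {
  '~standard': {
    version: 1,
    vendor: 'example',
    validate: value =>
      typeof value === 'string'
        ? { value }
        : { issues: [{ message: 'Expected a string' }] },
  },
}

parseWith(stringSchema, 'San Francisco').then(console.log) // San Francisco
parseWith(stringSchema, 42).catch(error => console.log(error.message)) // Expected a string
```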

Easy migration

Are you already using the Vercel AI SDK? Let's see how to migrate to xsAI:

```diff
- import { openai } from '@ai-sdk/openai'
- import { generateText, tool } from 'ai'
+ import { generateText, tool } from 'xsai'
+ import { env } from 'node:process'
  import * as z from 'zod'

  const { text } = await generateText({
+   apiKey: env.OPENAI_API_KEY!,
+   baseURL: 'https://api.openai.com/v1/',
-   model: openai('gpt-4o'),
+   model: 'gpt-4o',
    messages: [{
      role: 'user',
      content: 'What is the weather in San Francisco?',
    }],
-   tools: {
+   tools: [
-     weather: tool({
+     await tool({
+       name: 'weather',
        description: 'Get the weather in a location',
        parameters: z.object({
          location: z.string().describe('The location to get the weather for'),
        }),
        execute: async ({ location }) => ({
          location,
          temperature: 72 + Math.floor(Math.random() * 21) - 10,
        }),
      })
-   },
+   ],
  })
```
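If a project registers many tools, the object-to-array change in the diff can be done mechanically. `toolsRecordToArray` below is a hypothetical helper, not part of xsAI or the Vercel AI SDK: it moves each record key into a `name` field, which is the only structural change the diff makes to a tool definition.

```typescript
// Hypothetical helper: turns a record keyed by tool name into an array
// where each key becomes the definition's `name` field.
function toolsRecordToArray<T extends object>(tools: Record<string, T>): Array<T & { name: string }> {
  return Object.entries(tools).map(([name, definition]) => ({ ...definition, name }))
}

const migrated = toolsRecordToArray({
  weather: { description: 'Get the weather in a location' },
})
console.log(migrated[0].name) // weather
```

Each resulting definition would still need to go through `await tool(...)` as in the diff, for example by mapping over the array with `Promise.all`.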

That's it!

Next steps

Big fan of Anthropic's MCP?

We are working on Model Context Protocol support: #84

Don't like any of the cloud providers?

We are working on a 🤗 Transformers.js provider that lets you run LLMs and any other 🤗 Transformers.js-supported model directly in the browser, with the power of WebGPU!

You can track the progress here: #41. It is really cool and fun to run embedding, speech, and transcription models directly in the browser, so stay tuned!

Need framework bindings?

We will do this in v0.2. See you next time!

Documentation

Since this is just an introductory article, it only covers `generate-text` and `tool`.

The `xsai` package re-exports more utils:

```ts
export * from '@xsai/embed'
export * from '@xsai/generate-object'
export * from '@xsai/generate-speech'
export * from '@xsai/generate-text'
export * from '@xsai/generate-transcription'
export * from '@xsai/model'
export * from '@xsai/shared-chat'
export * from '@xsai/stream-object'
export * from '@xsai/stream-text'
export * from '@xsai/tool'
export * from '@xsai/utils-chat'
export * from '@xsai/utils-stream'
```

If you are interested, go to the documentation at https://xsai.js.org/docs to get started!

Besides xsAI, we made loads of other cool stuff too! Check out our moeru-ai GitHub organization!

Join our Community

If you have questions about anything related to xsAI, you're always welcome to ask our community on GitHub Discussions.